How to download datasets from IPython Shell in Datacamp

This blog post will show you how to download datasets from DataCamp Ipython Shell/Console in a web browser.

TL;DR: Use the python script to extract the link to download the targeting datasets from IPython Shell.

Motivation: While studying DataCamp courses, there were so many times that I could not reproduce the course results in my local environment with the provided datasets, which was quite frustrating. I had tried to reach out to the support team, but the results were not satisfactory. After spending hours searching on Stackoverflow here and there, then trying and failing numerous times with several scripts, I could finally manage to download the dataset that the course was using.

I decided to share my workaround here. Hopefully, it could help everyone who is facing the same problems.

I have summarized how I did it in the following steps:

Quick EDA

Run the following command to take do a quick check on the data

print(df.head())

y

2013-01-01 1.624345

2013-01-02 -0.936625

2013-01-03 0.081483

2013-01-04 -0.663558

2013-01-05 0.738023

then a quick look with df.info()

print(df.info())

<class 'pandas.core.frame.DataFrame'>

DatetimeIndex: 1000 entries, 2013-01-01 to 2015-09-27

Freq: D

Data columns (total 1 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 y 1000 non-null float64

dtypes: float64(1)

memory usage: 55.6 KB

None

Okay, the dataset has 1000 rows, with Freq set as Date, Dtype = float64, etc.

Here are the first 5 rows of data:

2013-01-01 1.624345

2013-01-02 -0.936625

2013-01-03 0.081483

2013-01-04 -0.663558

2013-01-05 0.738023

We will remember this, so we can check later once we download the dataset to our local environment.

Save the dataset to the cloud storage

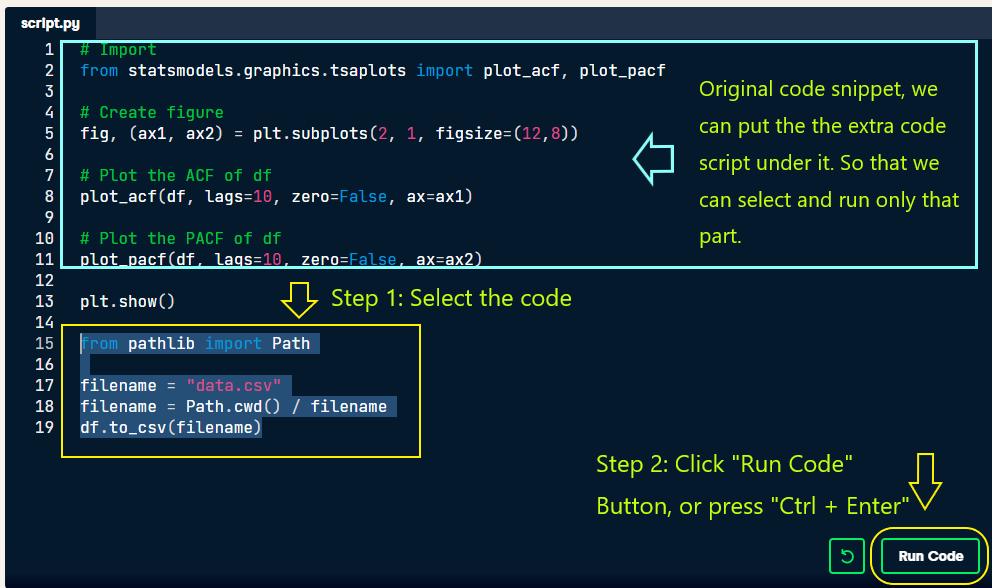

Next, we will save the dataset from the DataFrame to the cloud storage. Try the following code in the script.py.

CODE:

# Get the filename fullpath

from pathlib import Path

filename = "data.csv"

filename = Path.cwd() / filename

df.to_csv(filename)

Check the result by typing the following commands to the IPython Shell:

!pwd

!ls

Encode the file data and an generate HTML link

Next, we run the following code, to generate HTML data.

import base64

import pandas as pd

from IPython.display import HTML

in_file = open(filename, "rb")

csv = in_file.read()

# print(csv) # Uncomment this if you want to check the csv content

in_file.close()

b64 = base64.b64encode(csv)

payload = b64.decode()

html = '<a download="{filename}" href="data:text/csv;base64,{payload}" target="_blank">{title}</a>'

html = html.format(payload=payload,title=title,filename=filename)

# Print the link

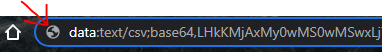

print("data:text/csv;base64,{}".format(payload))

Result:

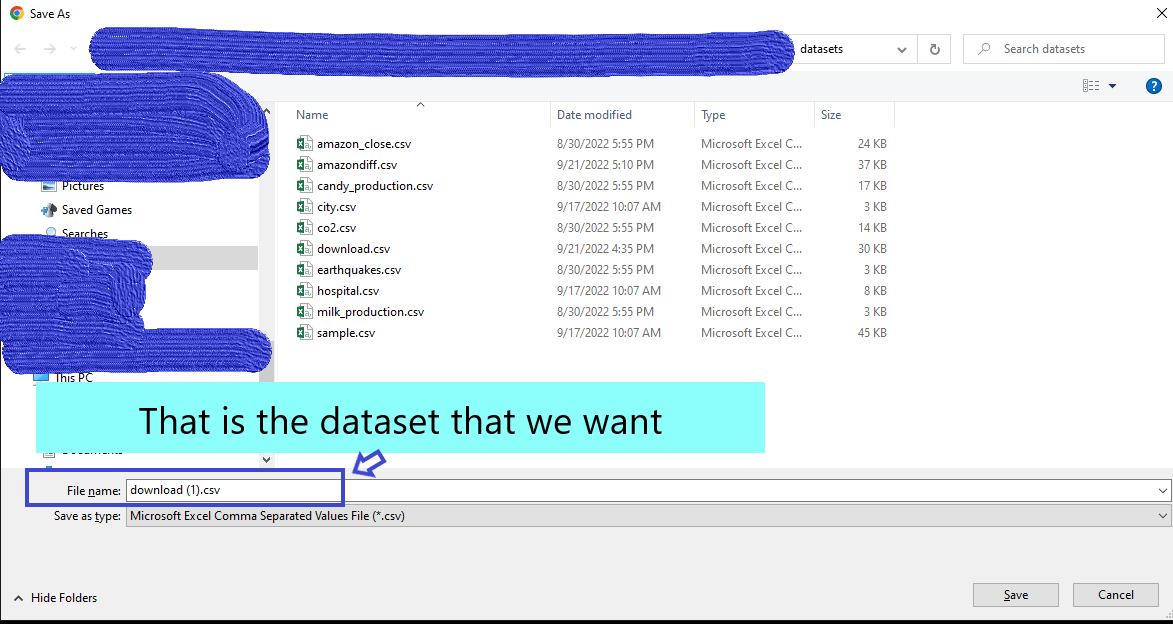

Paste the extracted link in the Shell output to a new tab in the browser.

Voila! That is the dataset that we want.

Check the downloaded dataset in your local Jupyter notebook

Use this code:

df = pd.read_csv('./datasets/download.csv', parse_dates=True, index_col=[0])

df = df.asfreq('d') # Set the frequent as DATE

df.info()

then

df.head()

Results:

As you can see, the downloaded dataset looks exactly like what we saw in the quick EDA section. You can now freely run your experiment locally with no worries about preprocessing the raw datasets.

Full Code

Here is the full code of the post. Use this script in the script.py, you should be able to download the targeted datasets.

import base64

import pandas as pd

from IPython.display import HTML

from pathlib import Path

def create_download_link( df, title = "Download CSV file", filename = "data.csv"):

filename = Path.cwd() / filename

df.to_csv(filename)

in_file = open(filename, "rb")

csv = in_file.read()

# print(csv) # Uncomment this if you want to check the csv content

in_file.close()

b64 = base64.b64encode(csv)

payload = b64.decode()

html = '<a download="{filename}" href="data:text/csv;base64,{payload}" target="_blank">{title}</a>'

html = html.format(payload=payload,title=title,filename=filename)

# print the link

print("data:text/csv;base64,{}".format(payload))

return HTML(html)

create_download_link(df)

print(df.info())

BONUS: Here is another version of the code. It was modified to help downloading numpy arrays.

import base64

import pandas as pd

import numpy as np

from IPython.display import HTML

from pathlib import Path

def create_download_link( numpy_arr, title = "Download CSV file", filename = "data.csv"):

# CONVERT numpy array to pandas frame.

df = pd.DataFrame(numpy_arr)

filename = Path.cwd() / filename

df.to_csv(filename)

in_file = open(filename, "rb")

csv = in_file.read()

# print(csv) # Uncomment this if you want to check the csv content

in_file.close()

b64 = base64.b64encode(csv)

payload = b64.decode()

html = '<a download="{filename}" href="data:text/csv;base64,{payload}" target="_blank">{title}</a>'

html = html.format(payload=payload,title=title,filename=filename)

# print the link

print("data:text/csv;base64,{}".format(payload))

return HTML(html)

create_download_link(new_inputs)

Then we can reload the downloaded numpy array as follows.

new_inputs_df = pd.read_csv('./datasets/data.csv', index_col=[0])

new_inputs = new_inputs_df.to_numpy()

new_inputs.reshape((100,)) # CONVERT it back to its original shape.

OTHER NOTE: To see the pre-defined function from the scripts.py use the following script:

import inspect

source = inspect.getsource(pre_defined_fucntion_name)

print(source)

UPDATE (2022 Oct 13)

In the above methods, it seems like that base64.b64encode create a TOO LONG string data when encoding the large table (Ex:Tables have more than 6500 rows). The download link is too long, and therefore nolonger possible to be loaded by the browser. The data will be lost if we use the above method. It will be trickier to download the data in these cases without encoding data with base64. One can think of using some string compression method to make the string shorter, but that is not feasible when huge tables present.

As an alternative, we can download the csv data as string in bytes format by:

- Manually copying data from the terminal

- Save it as .txt format

- Finally, reload it back to the original form.

In details:

- STEP 1: Download the data as "data.txt" file.

print(numpy_arr)

import pandas as pd

from pathlib import Path

def create_download_link(numpy_arr, filename = "data.csv"):

# CONVERT numpy array to pandas frame.

df = pd.DataFrame(numpy_arr)

filename = Path.cwd() / filename

df.to_csv(filename)

with open(filename, "rb") as in_file:

csv_data = in_file.read()

# print("data:text/csv;charset=utf-8,{}".format(csv_data)) --> not working since '\\n' is not the actual new line char anymore.

# print("data:text/plain;charset=utf-8,{}".format(csv_data)) # save the byte data as plain text

print(csv_data, '\n\n\n') # if the browser can not cover all character, we ll paste the data to .txt file directly

create_download_link(abnormal)

- STEP 2: Load the "data.txt" file, and convert it back to normal csv format file.

import re, csv, os

file_path = './datasets/all_prices.txt'

with open(file_path, "r") as f:

plain_str = f.read()

print(plain_str[:10], plain_str[-10:])

# remove byte traces

csv_data = re.match(r".*?b\'(.*)\'.*?", plain_str).group(1)

print("START:\n",csv_data[:250],"\nEND:", csv_data[-250:])

# remove \\n with actual new line chars

csv_data = csv_data.replace('\\n', '\n')

print("START:\n",csv_data[:250],"\nEND:", csv_data[-250:])

# update csv format to the filename

with open(os.path.splitext(file_path)[0] + '.csv', 'w') as out:

out.write(csv_data)

- STEP 3: Load the csv data as usual, we can also convert it to numpy array if we want.

# Read in the data

df = pd.read_csv('./datasets/data.csv', index_col=[0])

print(df.info())

display(df.tail())

new_np_arr = df.to_numpy()

print(new_np_arr.shape)

That's it. Thank you for reading. If you find the blog post useful, please give the GitHub blog repo a star to show your support and share it with others. Also, please let me know in the comments section of the post if you have any questions.