Winning a Kaggle Competition in Python - Part 3

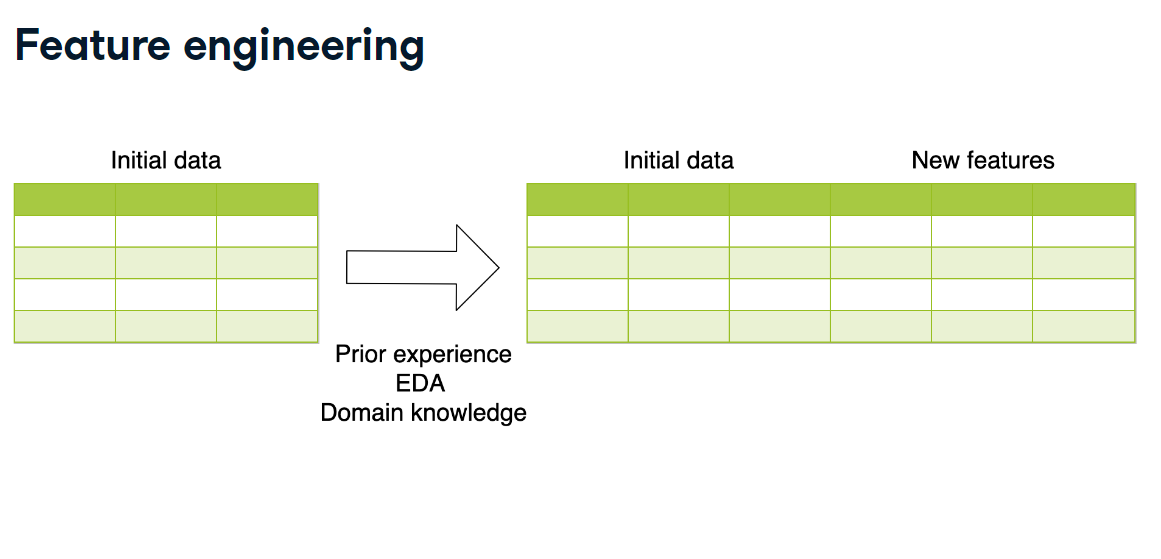

Feature Engineering - You will now get exposure to different types of features. You will modify existing features and create new ones. Also, you will treat the missing data accordingly.

import pandas as pd

import numpy as np

pd.set_option('display.expand_frame_repr', False)

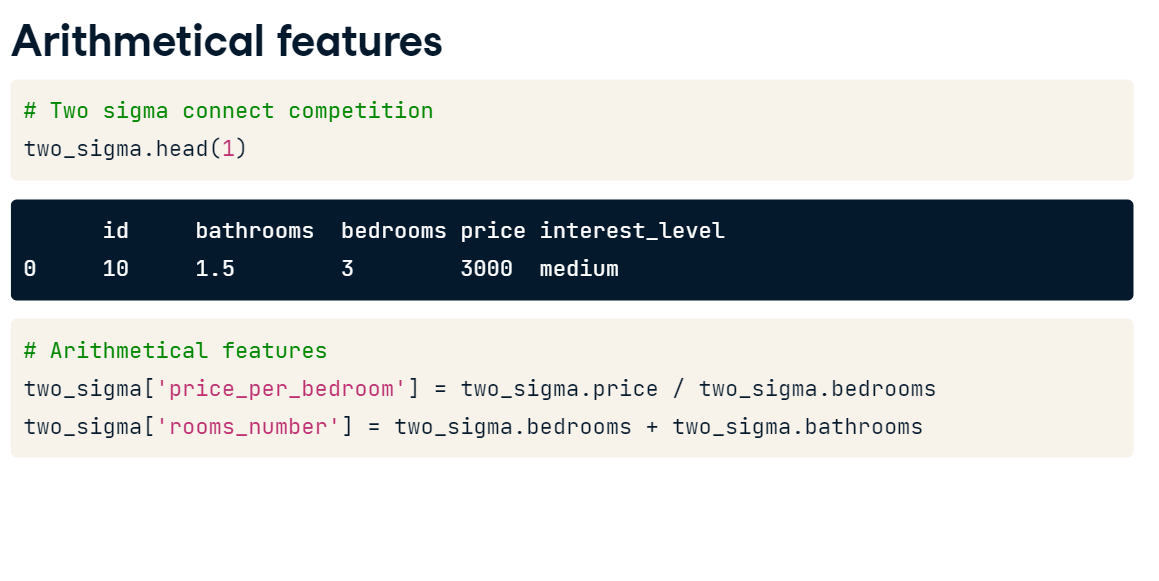

Arithmetical features

Arithmetical features (Exercise):

To practice creating new features, you will be working with a subsample from the Kaggle competition called "House Prices: Advanced Regression Techniques". The goal of this competition is to predict the price of the house based on its properties. It's a regression problem with Root Mean Squared Error as an evaluation metric.

Your goal is to create new features and determine whether they improve your validation score. To get the validation score from 5-fold cross-validation, you're given the get_kfold_rmse() function. Use it with the train DataFrame, available in your workspace, as an argument.

Instructions:

- Create a new feature representing the total area (basement, 1st and 2nd floors) of the house. The columns "TotalBsmtSF", "1stFlrSF" and "2ndFlrSF" give the areas of the basement, 1st and 2nd floors, respectively.

train = pd.read_csv('./datasets/house_prices_train.csv')

test = pd.read_csv('./datasets/house_prices_test.csv')

from sklearn.ensemble import RandomForestRegressor

from sklearn.model_selection import KFold

from sklearn.metrics import mean_squared_error

kf = KFold(n_splits=5, shuffle=True, random_state=123)

def get_kfold_rmse(train):

    mse_scores = []

    # Fill missing values once, before iterating over the folds

    train = train.fillna(0)

    feats = [x for x in train.columns if x not in ['Id', 'SalePrice', 'RoofStyle', 'CentralAir']]

    for train_index, test_index in kf.split(train):

        # kf.split() yields positional indices, so select rows with .iloc

        fold_train, fold_test = train.iloc[train_index], train.iloc[test_index]

        # Create a Random Forest object

        rf = RandomForestRegressor(n_estimators=10, min_samples_split=10, random_state=123)

        # Train a model

        rf.fit(X=fold_train[feats], y=fold_train['SalePrice'])

        # Get predictions for the held-out fold

        pred = rf.predict(fold_test[feats])

        fold_score = mean_squared_error(fold_test['SalePrice'], pred)

        mse_scores.append(np.sqrt(fold_score))

    # Report the mean RMSE across folds plus one standard deviation

    return round(np.mean(mse_scores) + np.std(mse_scores), 2)

# Look at the initial RMSE

print('RMSE before feature engineering:', get_kfold_rmse(train))

# Find the total area of the house

train['TotalArea'] = train['TotalBsmtSF'] + train['1stFlrSF'] + train['2ndFlrSF']

print('RMSE with total area:', get_kfold_rmse(train))

# Find the area of the garden

train['GardenArea'] = train['LotArea'] - train['1stFlrSF']

print('RMSE with garden area:', get_kfold_rmse(train))

# Find total number of bathrooms

train['TotalBath'] = train["FullBath"] + train["HalfBath"]

print('RMSE with number of bathrooms:', get_kfold_rmse(train))
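The same arithmetic features also have to exist in the test data before you make predictions, so it can help to wrap them in one function. The sketch below is only an illustration on made-up numbers: the helper name add_area_features() is hypothetical, and the column names are assumed to match the ones used in the code above.

```python
import pandas as pd


def add_area_features(df):
    """Return a copy of df with the total area and garden area features."""
    out = df.copy()
    # Total area: basement + 1st floor + 2nd floor
    out['TotalArea'] = out['TotalBsmtSF'] + out['1stFlrSF'] + out['2ndFlrSF']
    # Garden area: lot area minus the area of the 1st floor
    out['GardenArea'] = out['LotArea'] - out['1stFlrSF']
    return out


# Toy rows with made-up values, only to show the arithmetic
toy = pd.DataFrame({
    'TotalBsmtSF': [800, 0],
    '1stFlrSF': [900, 1200],
    '2ndFlrSF': [700, 0],
    'LotArea': [8000, 9500],
})
toy_features = add_area_features(toy)
print(toy_features[['TotalArea', 'GardenArea']])
```

Because the function works on a copy, the input DataFrame is left unchanged and the same call can be reused for train and test.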

Nice! You've created three new features. Here you see that house area improved the RMSE by almost 1,000 USD. Adding garden area improved the RMSE by another 600. However, with the total number of bathrooms, the RMSE has increased. It means that you keep the new area features, but DO NOT ADD "TotalBath" as a new feature. Let's now work with the datetime features!

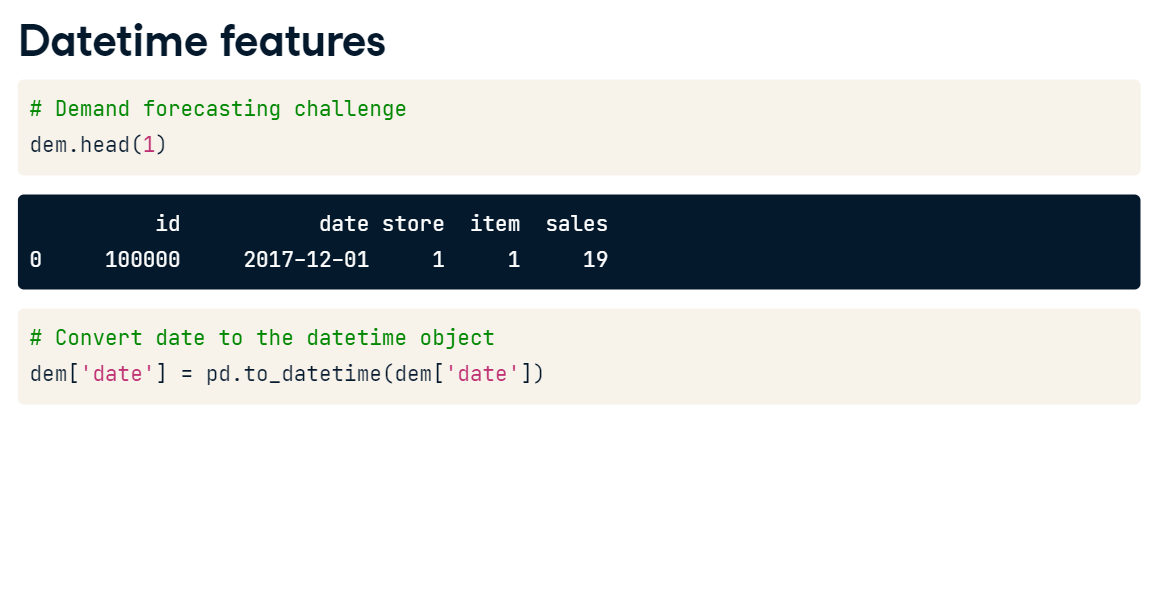

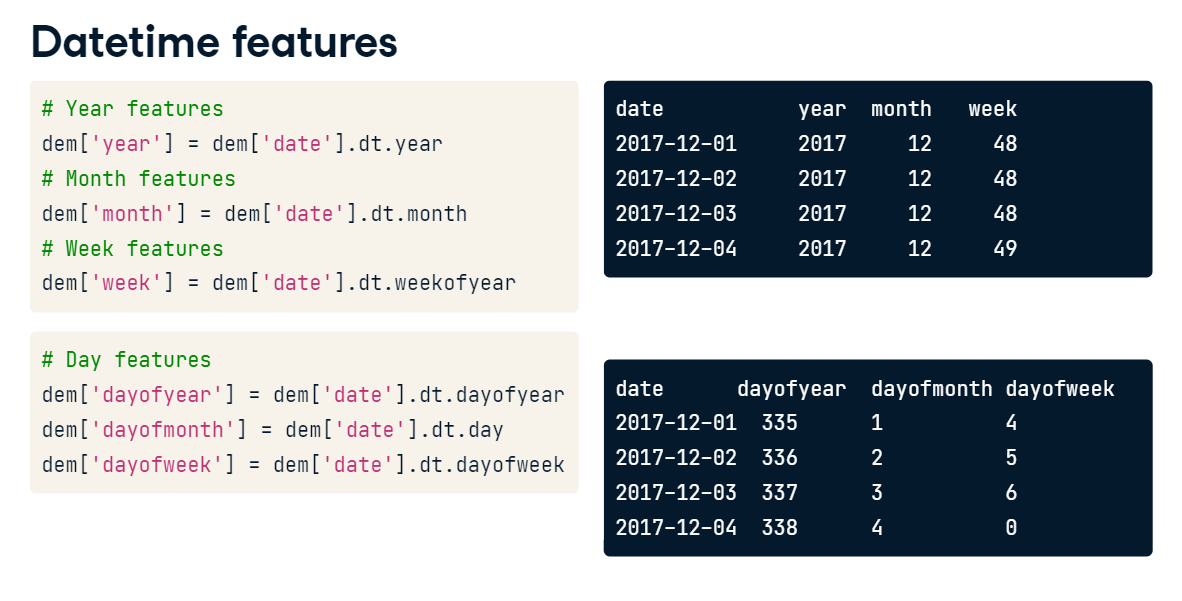

Date features (Exercise):

You've built some basic features using numerical variables. Now, it's time to create features based on date and time. You will practice on a subsample from the Taxi Fare Prediction Kaggle competition data. The data represents information about the taxi rides and the goal is to predict the price for each ride.

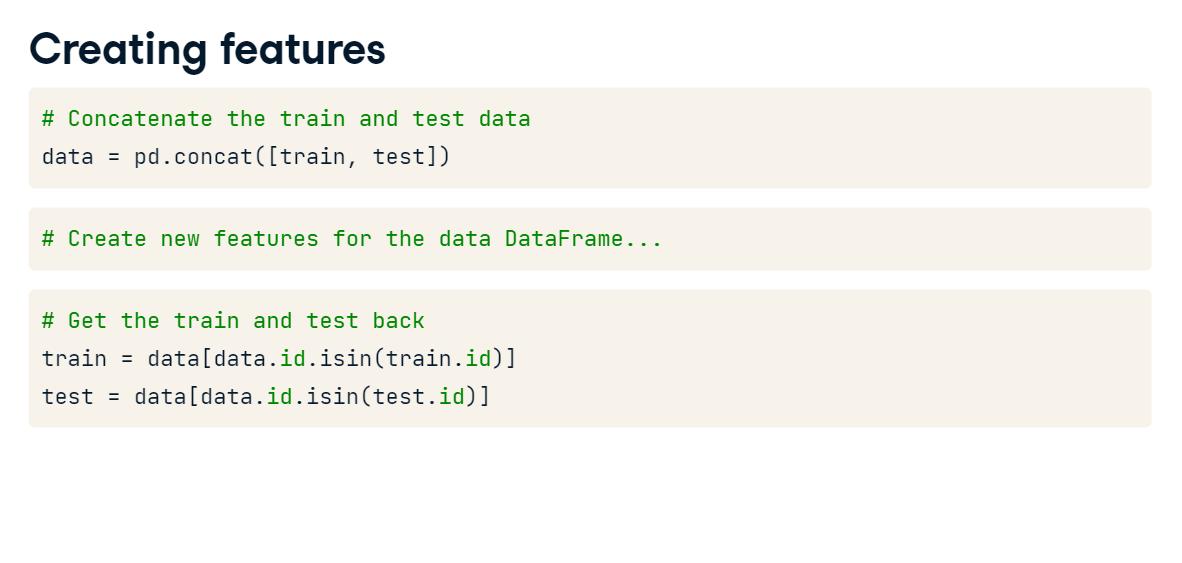

Your objective is to generate date features from the pickup datetime. Recall that it's better to create new features for train and test data simultaneously. After the features are created, split the data back into the train and test DataFrames. Here it's done using pandas' isin() method.

The train and test DataFrames are already available in your workspace.

Instructions:

- Concatenate the train and test DataFrames into a single DataFrame taxi.

- Convert the "pickup_datetime" column to a datetime object.

- Create the day of week (using .dayofweek attribute) and hour (using .hour attribute) features from the "pickup_datetime" column.

train = pd.read_csv('./datasets/taxi_train_chapter_4.csv')

test = pd.read_csv('./datasets/taxi_test_chapter_4.csv')

taxi = pd.concat([train, test])

# Convert pickup date to datetime object

taxi['pickup_datetime'] = pd.to_datetime(taxi['pickup_datetime'])

# Create a day of week feature

taxi['dayofweek'] = taxi['pickup_datetime'].dt.dayofweek

# Create an hour feature

taxi['hour'] = taxi['pickup_datetime'].dt.hour

# Split back into train and test

new_train = taxi[taxi['id'].isin(train['id'])]

new_test = taxi[taxi['id'].isin(test['id'])]
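To see the concatenate, transform, split pattern end to end, here is a minimal sketch on three made-up rides. The toy values and the "fare_amount" column name are assumptions for illustration; only "id" and "pickup_datetime" come from the exercise.

```python
import pandas as pd

# Made-up rides: the test frame has no target column
toy_train = pd.DataFrame({
    'id': [0, 1],
    'pickup_datetime': ['2015-01-05 08:30:00', '2015-01-10 23:15:00'],
    'fare_amount': [7.5, 12.0],
})
toy_test = pd.DataFrame({
    'id': [2],
    'pickup_datetime': ['2015-01-11 14:00:00'],
})

# ignore_index=True avoids duplicate index labels after stacking
toy_taxi = pd.concat([toy_train, toy_test], ignore_index=True)
toy_taxi['pickup_datetime'] = pd.to_datetime(toy_taxi['pickup_datetime'])

# .dt.dayofweek counts from Monday=0 to Sunday=6
toy_taxi['dayofweek'] = toy_taxi['pickup_datetime'].dt.dayofweek
toy_taxi['hour'] = toy_taxi['pickup_datetime'].dt.hour

# Split back using the ids of the original frames
toy_new_train = toy_taxi[toy_taxi['id'].isin(toy_train['id'])]
toy_new_test = toy_taxi[toy_taxi['id'].isin(toy_test['id'])]
print(toy_new_train[['id', 'dayofweek', 'hour']])
print(toy_new_test[['id', 'dayofweek', 'hour']])
```

Note that the split relies on the ids being unique across train and test; the target column of the test rows is simply filled with NaN after concatenation.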

Great! Now you know how to perform feature engineering for train and test DataFrames simultaneously. Having considered numerical and datetime features, move forward to master feature engineering for categorical ones!

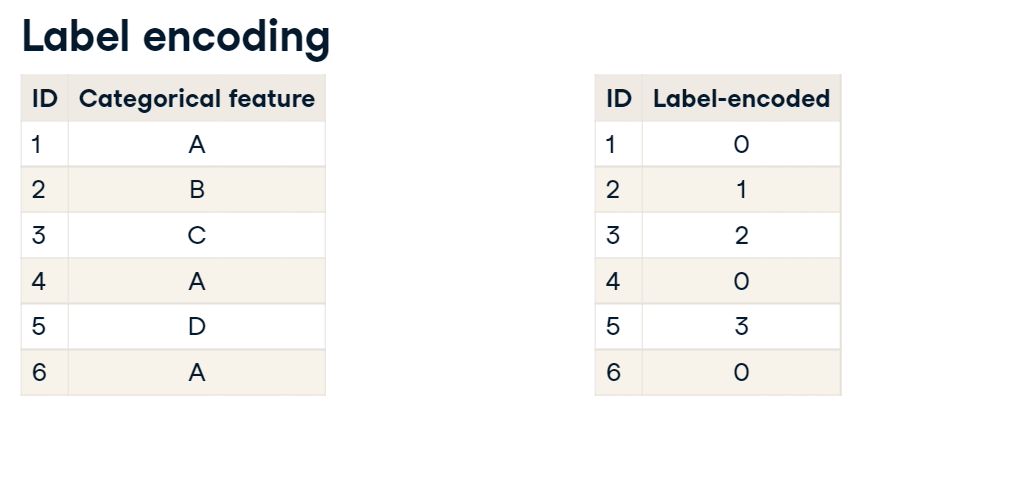

Encoding

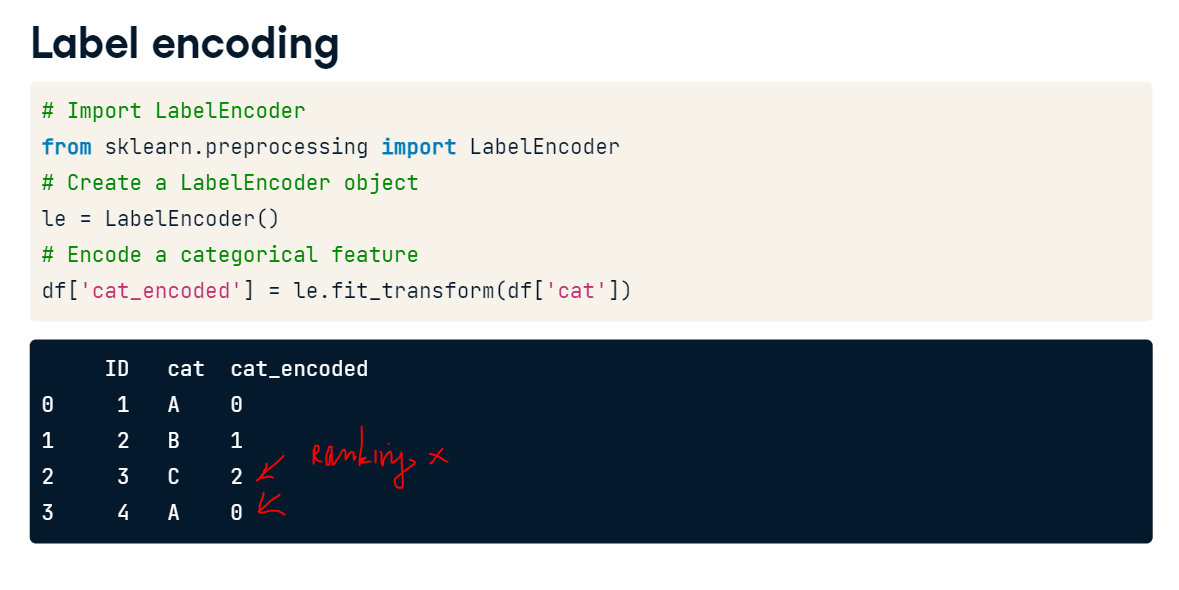

Label encoding

Label encoding (Exercise):

Let's work on categorical variables encoding. You will again work with a subsample from the House Prices Kaggle competition.

Your objective is to encode categorical features "RoofStyle" and "CentralAir" using label encoding. The train and test DataFrames are already available in your workspace.

Instructions:

- Concatenate train and test DataFrames into a single DataFrame houses.

- Create a LabelEncoder object without arguments and assign it to le.

- Create new label-encoded features for "RoofStyle" and "CentralAir" using the same le object.

train = pd.read_csv('./datasets/house_prices_train.csv')

test = pd.read_csv('./datasets/house_prices_test.csv')

houses = pd.concat([train, test])

# Label encoder

from sklearn.preprocessing import LabelEncoder

le = LabelEncoder()

# Create new features

houses['RoofStyle_enc'] = le.fit_transform(houses['RoofStyle'])

houses['CentralAir_enc'] = le.fit_transform(houses['CentralAir'])

# Look at new features

display(houses[['RoofStyle', 'RoofStyle_enc', 'CentralAir', 'CentralAir_enc']].head())
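One thing to keep in mind when reusing the same le object: every call to fit_transform() refits the encoder, so afterwards it only remembers the categories of the last column. If you want to map the codes back to category names later, one option is to keep a separate encoder per column. The sketch below uses a made-up toy DataFrame, and the encoders dictionary is a hypothetical name.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

# Made-up values, only to show the mapping
toy_houses = pd.DataFrame({
    'RoofStyle': ['Gable', 'Hip', 'Gable', 'Flat'],
    'CentralAir': ['Y', 'N', 'Y', 'Y'],
})

# Keep one fitted encoder per column so each mapping can be inverted later
encoders = {}
for col in ['RoofStyle', 'CentralAir']:
    enc = LabelEncoder()
    toy_houses[col + '_enc'] = enc.fit_transform(toy_houses[col])
    encoders[col] = enc

print(toy_houses)

# Classes are stored in sorted order; the code is the position in this list
print(list(encoders['RoofStyle'].classes_))

# Decode the integer codes back to the original categories
roof_back = encoders['RoofStyle'].inverse_transform(toy_houses['RoofStyle_enc'])
```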

All right! You can see that categorical variables have been label encoded. However, as you already know, label encoder is not always a good choice for categorical variables. Let's go further and apply One-Hot encoding.

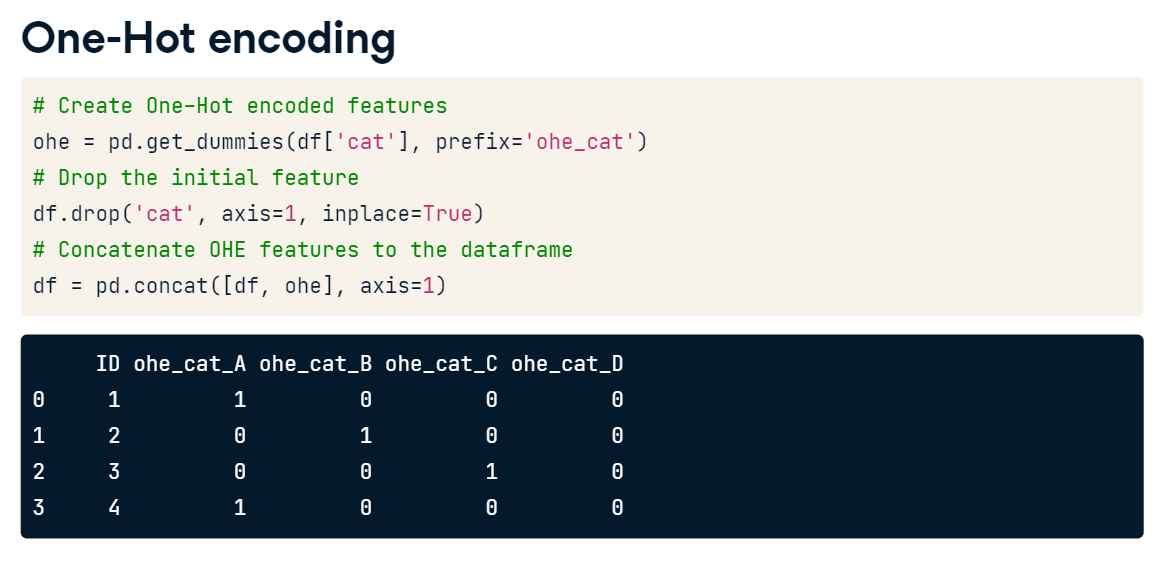

One-Hot encoding

One-Hot encoding (Exercise):

The problem with label encoding is that it implicitly assumes that there is a ranking dependency between the categories. So, let's change the encoding method for the features "RoofStyle" and "CentralAir" to one-hot encoding. Again, the train and test DataFrames from House Prices Kaggle competition are already available in your workspace.

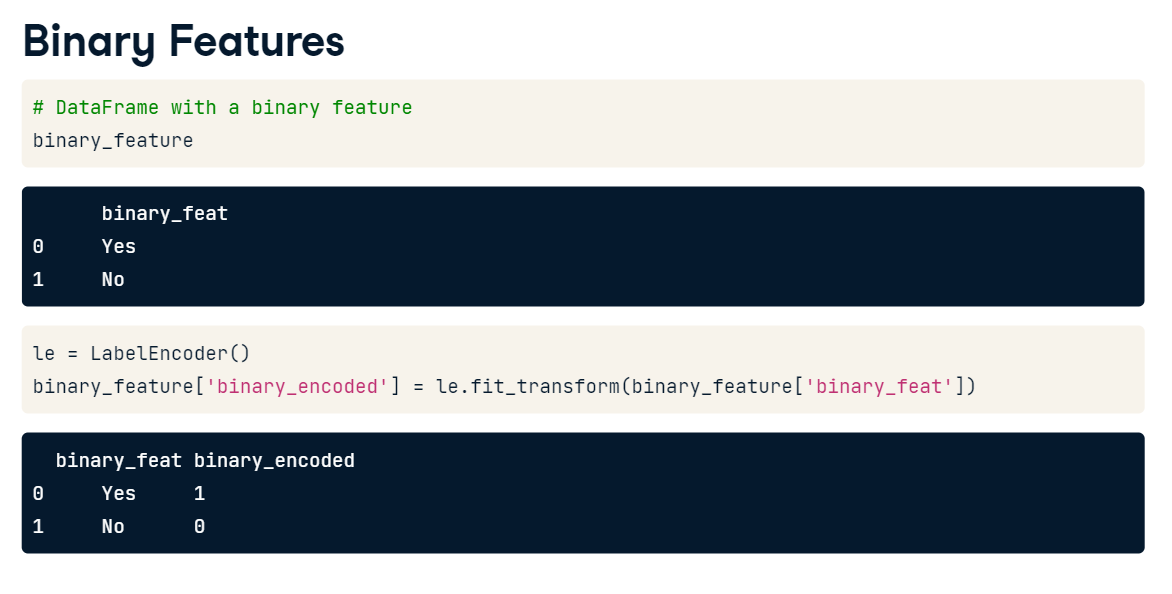

Recall that if you're dealing with binary features (categorical features with only two categories) it is suggested to apply label encoder only.

Your goal is to determine which of the mentioned features is not binary, and to apply one-hot encoding only to this one.

Instructions:

- Determine the distribution of "RoofStyle" and "CentralAir" features using pandas' value_counts() method.

- For the categorical feature "RoofStyle" let's use the one-hot encoder. Firstly, create one-hot encoded features using the get_dummies() method. Then they are concatenated to the initial houses DataFrame.

houses = pd.concat([train, test])

# Look at feature distributions

print(houses['RoofStyle'].value_counts(), '\n')

print(houses['CentralAir'].value_counts())

pd.set_option('display.expand_frame_repr', False)

# Concatenate train and test together

houses = pd.concat([train, test])

# Label encode binary 'CentralAir' feature

from sklearn.preprocessing import LabelEncoder

le = LabelEncoder()

houses['CentralAir_enc'] = le.fit_transform(houses['CentralAir'])

# Create One-Hot encoded features

ohe = pd.get_dummies(houses['RoofStyle'], prefix='RoofStyle')

# Concatenate OHE features to houses

houses = pd.concat([houses, ohe], axis=1)

# Look at OHE features

display(houses[[col for col in houses.columns if 'RoofStyle' in col]].head(3))
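To make the shape of the result concrete, here is a small sketch of get_dummies() on a made-up column with three roof styles. Each category becomes its own indicator column, and every row has exactly one indicator switched on.

```python
import pandas as pd

# Made-up values, only to show the resulting columns
toy = pd.DataFrame({'RoofStyle': ['Gable', 'Hip', 'Gable', 'Flat']})

# One indicator column per category, named <prefix>_<category>
toy_ohe = pd.get_dummies(toy['RoofStyle'], prefix='RoofStyle')
toy = pd.concat([toy, toy_ohe], axis=1)
print(toy)
```

Depending on the pandas version, the indicator columns are stored as booleans or as 0/1 integers; both can be passed to tree-based models.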

Question: Which of the features is binary? Answer: "CentralAir".

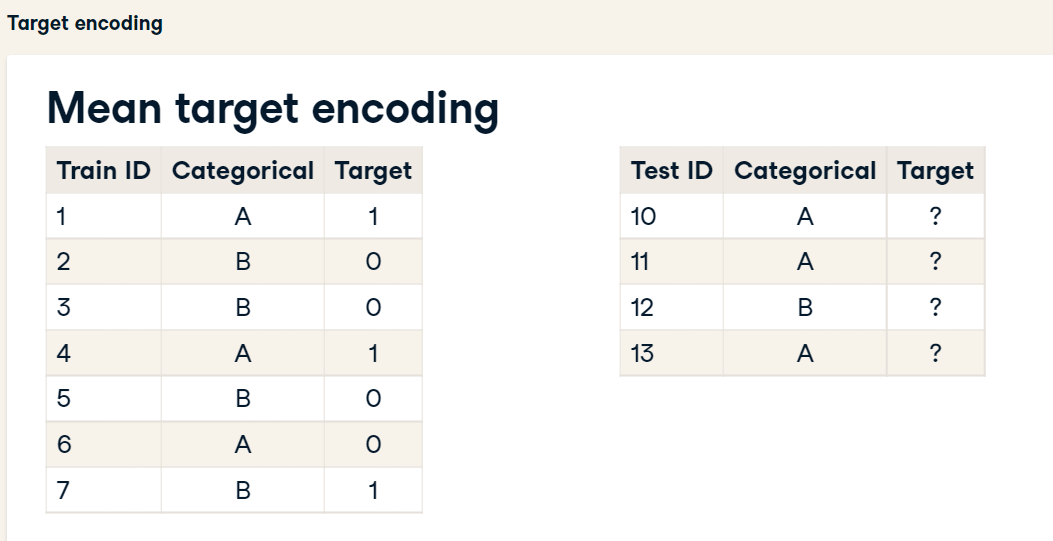

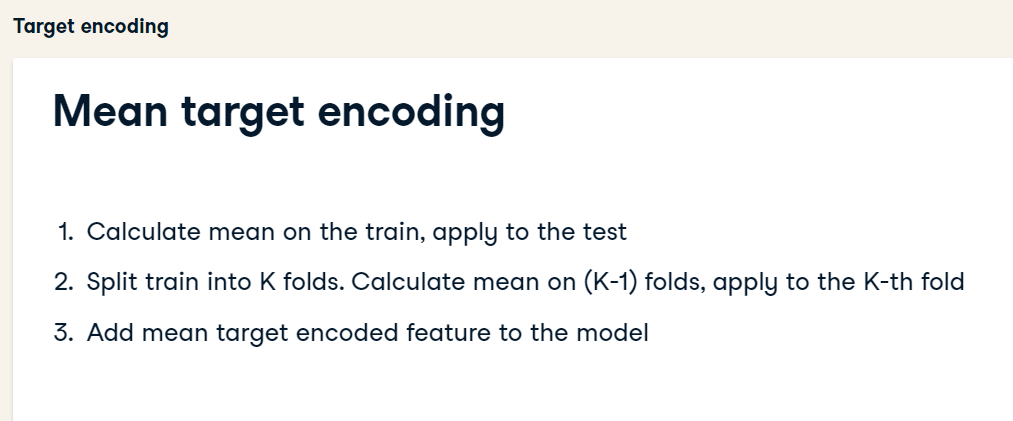

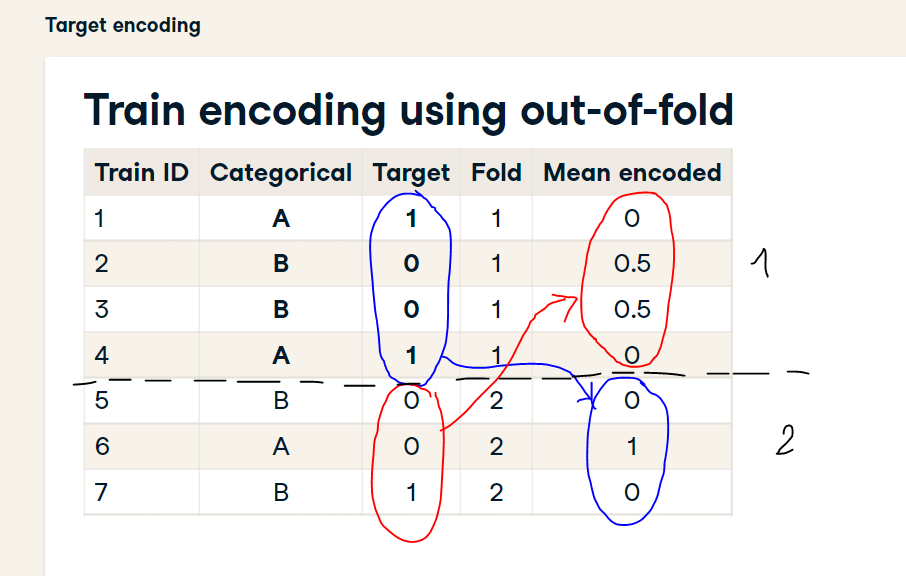

Mean target encoding

Mean target encoding (Exercise):

First of all, you will create a function that implements mean target encoding. Remember that you need to develop the two following steps:

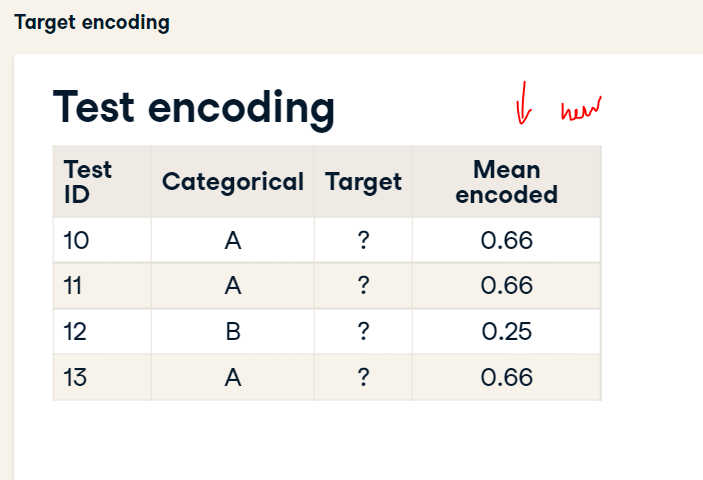

- Calculate the mean on the train, apply to the test.

- Split train into K folds. Calculate the out-of-fold mean for each fold, apply to this particular fold.

Each of these steps will be implemented in a separate function: test_mean_target_encoding() and train_mean_target_encoding(), respectively.

The final function mean_target_encoding() takes as arguments: the train and test DataFrames, the name of the categorical column to be encoded, the name of the target column and a smoothing parameter alpha. It returns two values: a new feature for train and test DataFrames, respectively.

Instructions:

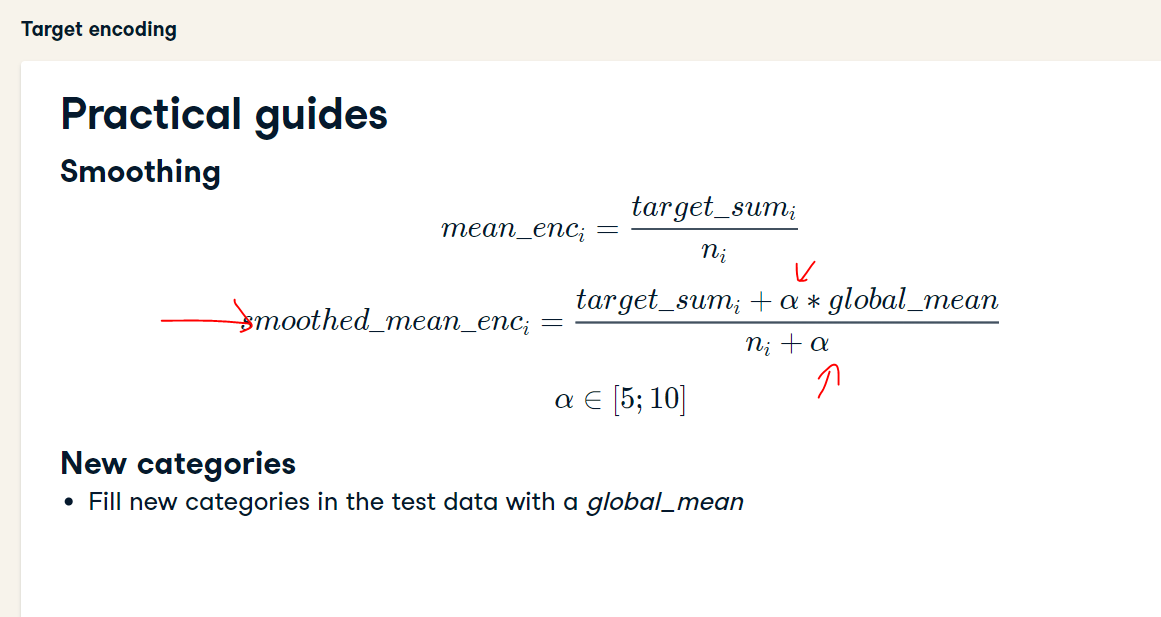

- You need to add smoothing to avoid overfitting. So, add the alpha parameter to the denominator in the train_statistics calculation.

- You need to treat new categories in the test data. So, pass a global mean as an argument to the fillna() method.

def test_mean_target_encoding(train, test, target, categorical, alpha=5):

    # Calculate global mean on the train data

    global_mean = train[target].mean()

    # Group by the categorical feature and calculate its properties

    train_groups = train.groupby(categorical)

    category_sum = train_groups[target].sum()

    category_size = train_groups.size()

    # Calculate smoothed mean target statistics

    train_statistics = (category_sum + global_mean * alpha) / (category_size + alpha)

    # Apply statistics to the test data and fill new categories

    test_feature = test[categorical].map(train_statistics).fillna(global_mean)

    return test_feature.values

- To calculate the train mean encoded feature you need to use out-of-fold statistics, splitting train into several folds. Specify the train and test indices for each validation split to access it.

def train_mean_target_encoding(train, target, categorical, alpha=5):

    # Create 5-fold cross-validation

    kf = KFold(n_splits=5, random_state=123, shuffle=True)

    train_feature = pd.Series(index=train.index, dtype='float')

    # For each folds split

    for train_index, test_index in kf.split(train):

        cv_train, cv_test = train.iloc[train_index], train.iloc[test_index]

        # Calculate out-of-fold statistics and apply to cv_test

        cv_test_feature = test_mean_target_encoding(cv_train, cv_test, target, categorical, alpha)

        # Save new feature for this particular fold

        train_feature.iloc[test_index] = cv_test_feature

    return train_feature.values

- Finally, you just calculate train and test target mean encoded features and return them from the function. So, return train_feature and test_feature obtained.

def mean_target_encoding(train, test, target, categorical, alpha=5):

    # Get the train feature

    train_feature = train_mean_target_encoding(train, target, categorical, alpha)

    # Get the test feature

    test_feature = test_mean_target_encoding(train, test, target, categorical, alpha)

    # Return new features to add to the model

    return train_feature, test_feature
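To check the smoothing arithmetic by hand, here is a minimal worked sketch on a made-up four-row train set. It repeats only the statistics step of test_mean_target_encoding(); the toy column names "category" and "target" are assumptions for illustration.

```python
import pandas as pd

# Made-up data: category 'A' has three rows, 'B' has one
toy_train = pd.DataFrame({
    'category': ['A', 'A', 'A', 'B'],
    'target': [1, 1, 0, 0],
})
# 'C' never appears in the train data
toy_test = pd.DataFrame({'category': ['A', 'B', 'C']})
alpha = 5

# Global mean of the target: 2 / 4 = 0.5
global_mean = toy_train['target'].mean()

groups = toy_train.groupby('category')['target']
# A: (2 + 0.5 * 5) / (3 + 5) = 0.5625
# B: (0 + 0.5 * 5) / (1 + 5) = 2.5 / 6
smoothed = (groups.sum() + global_mean * alpha) / (groups.size() + alpha)

# Unseen categories fall back to the global mean
toy_test['category_enc'] = toy_test['category'].map(smoothed).fillna(global_mean)
print(toy_test)
```

The raw mean of category "A" is 2/3, but with only three rows the smoothed value is pulled most of the way towards the global mean of 0.5. The larger alpha is, the stronger this pull becomes for small categories.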

Now you are equipped with a function that performs mean target encoding of any categorical feature. Move on to learn how to implement mean target encoding for the K-fold cross-validation using the mean_target_encoding() function you've just built!

Cross Validation

K-fold cross-validation

K-fold cross-validation (Exercise)

You will work with a binary classification problem on a subsample from Kaggle playground competition. The objective of this competition is to predict whether a famous basketball player Kobe Bryant scored a basket or missed a particular shot.

Train data is available in your workspace as the bryant_shots DataFrame. It contains data on 10,000 shots with their properties and a target variable "shot_made_flag" -- whether the shot was scored or not.

One of the features in the data is "game_id" -- a particular game where the shot was made. There are 541 distinct games. So, you deal with a high-cardinality categorical feature. Let's encode it using a target mean!

Suppose you're using 5-fold cross-validation and want to evaluate a mean target encoded feature on the local validation.

Instructions:

- To achieve this, you need to repeat the encoding procedure for the "game_id" categorical feature inside each fold split separately. Your goal is to specify all the missing parameters for the mean_target_encoding() function call inside each fold split.

- Recall that the train and test parameters expect the train and test DataFrames.

- While the target and categorical parameters expect names of the target variable and categorical feature to be encoded.

bryant_shots = pd.read_csv('./datasets/bryant_shots.csv')

display(bryant_shots.head())

display(bryant_shots.describe())

# info() prints its summary directly and returns None, so no display() is needed

bryant_shots.info()

print(len(bryant_shots.game_id.unique()))

kf = KFold(n_splits=5, random_state=123, shuffle=True)

# For each folds split

for train_index, test_index in kf.split(bryant_shots):

    cv_train, cv_test = bryant_shots.iloc[train_index].copy(), bryant_shots.iloc[test_index].copy()

    # Create mean target encoded feature

    cv_train['game_id_enc'], cv_test['game_id_enc'] = mean_target_encoding(train=cv_train,

                                                                           test=cv_test,

                                                                           target='shot_made_flag',

                                                                           categorical='game_id',

                                                                           alpha=5)

    # Look at the encoding

    display(cv_train[['game_id', 'shot_made_flag', 'game_id_enc']].sample(n=1))

You can see different game encodings for each validation split in the output. The main conclusion you should make: while using local cross-validation, you need to repeat the mean target encoding procedure inside each fold split separately. Go on to try other problem types beyond binary classification!

Beyond binary classification

Beyond binary classification (Exercise)

Of course, binary classification is just a single special case. Target encoding could be applied to any target variable type:

- For binary classification, mean target encoding is usually used.

- For regression, the mean could be changed to median, quartiles, etc.

- For multi-class classification with N classes, we create N features with the target mean for each category in a one-vs-all fashion.

The mean_target_encoding() function you've created could be used for any target type specified above. Let's apply it for the regression problem on the example of House Prices Kaggle competition.
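The multi-class case is the least obvious of the three, so here is a rough sketch of the one-vs-all idea on made-up data. For brevity it uses plain in-sample category means, without the smoothing and out-of-fold steps a real pipeline would need; the column names "color" and "label" are assumptions for illustration.

```python
import pandas as pd

# Made-up data with a categorical feature and a 3-class target
df = pd.DataFrame({
    'color': ['red', 'red', 'blue', 'blue', 'blue', 'green'],
    'label': ['a', 'b', 'a', 'a', 'c', 'c'],
})

# One encoded feature per class: the share of that class within each category
for cls in sorted(df['label'].unique()):
    indicator = (df['label'] == cls).astype(float)   # 1.0 for this class, 0.0 for all others
    df['color_enc_' + cls] = indicator.groupby(df['color']).transform('mean')

print(df)
```

With three classes you get three new features, and for every row they sum to one because they are class shares within the same category.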

Your goal is to encode a categorical feature "RoofStyle" using mean target encoding. The train and test DataFrames are already available in your workspace.

Instructions:

- Specify all the missing parameters for the mean_target_encoding() function call. Target variable name is "SalePrice". Set the smoothing hyperparameter alpha to 10.

- Recall that the train and test parameters expect the train and test DataFrames.

- While the target and categorical parameters expect names of the target variable and feature to be encoded.

train = pd.read_csv('./datasets/house_prices_train.csv')

test = pd.read_csv('./datasets/house_prices_test.csv')

# Create mean target encoded feature

train['RoofStyle_enc'], test['RoofStyle_enc'] = mean_target_encoding(train=train,

test=test,

target='SalePrice',

categorical='RoofStyle',

alpha=10)

# Look at the encoding

test[['RoofStyle', 'RoofStyle_enc']].drop_duplicates()

So, you observe that houses with the Hip roof are the most pricey, while houses with the Gambrel roof are the cheapest. It's exactly the goal of target encoding: you've encoded the categorical feature in such a manner that there is now a correlation between category values and the target variable. We're done with categorical encoders. Now it's time to talk about the missing data!

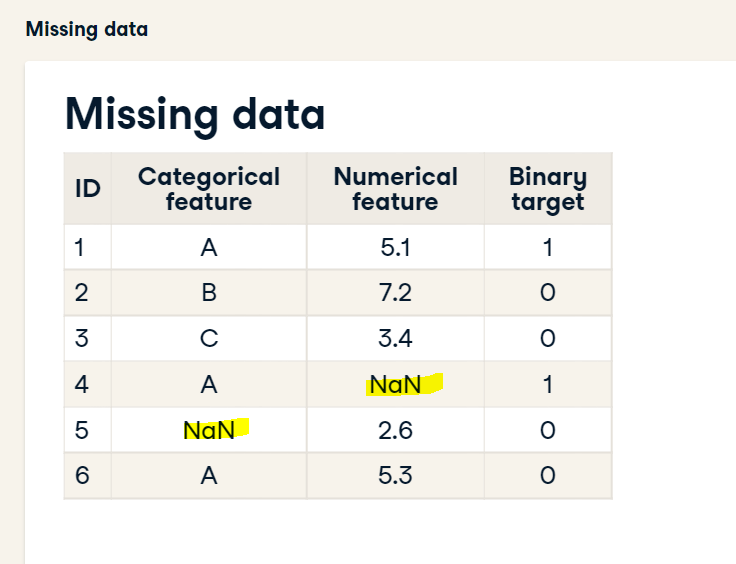

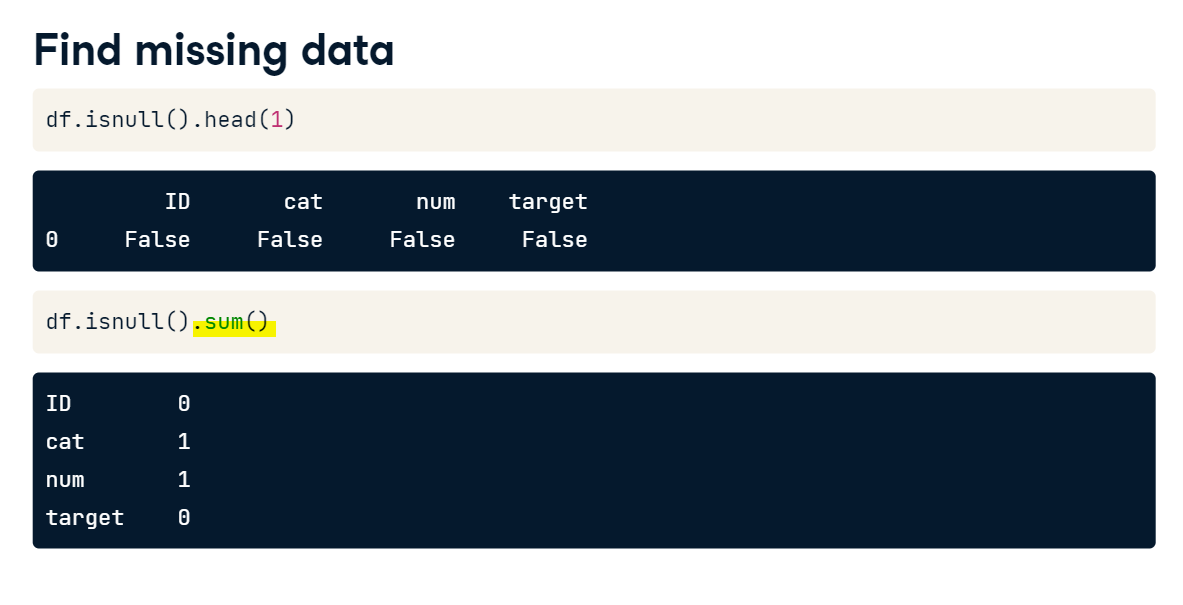

Find missing data (Exercise):

Let's impute missing data on a real Kaggle dataset. For this purpose, you will be using a data subsample from the Kaggle "Two Sigma Connect: Rental Listing Inquiries" competition.

Before proceeding with any imputing you need to know the number of missing values for each of the features. Moreover, if the feature has missing values, you should explore the type of this feature.

Instructions:

- Read the "twosigma_rental_train_null.csv" file using pandas.

- Find the number of missing values in each column.

- Select the columns with the missing values and look at the head of the DataFrame.

twosigma = pd.read_csv('./datasets/twosigma_rental_train_null.csv')

# Find the number of missing values in each column

print(twosigma.isnull().sum())

# Look at the columns with the missing values

print(twosigma[['building_id', 'price']].head())

All right, you've found out that 'building_id' and 'price' columns have missing values. Looking at the head of the DataFrame, we may conclude that 'price' is a numerical feature, while 'building_id' is a categorical feature that is encoding buildings as hashes.
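In the code above the two column names are typed in by hand after reading the isnull() output. On a wider dataset it may be more convenient to select them programmatically. The following sketch does that on a made-up three-row DataFrame; the toy values are assumptions for illustration.

```python
import numpy as np
import pandas as pd

# Made-up listings: 'price' has one missing value, 'building_id' has two
toy = pd.DataFrame({
    'id': [1, 2, 3],
    'price': [3000.0, np.nan, 2500.0],
    'building_id': ['a1b2', np.nan, np.nan],
})

# Number of missing values per column
missing_counts = toy.isnull().sum()

# Keep only the columns that have at least one missing value
cols_with_missing = missing_counts[missing_counts > 0].index.tolist()

print(missing_counts)
print(toy[cols_with_missing].head())
print(toy[cols_with_missing].dtypes)   # helps decide between numerical and categorical imputation
```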

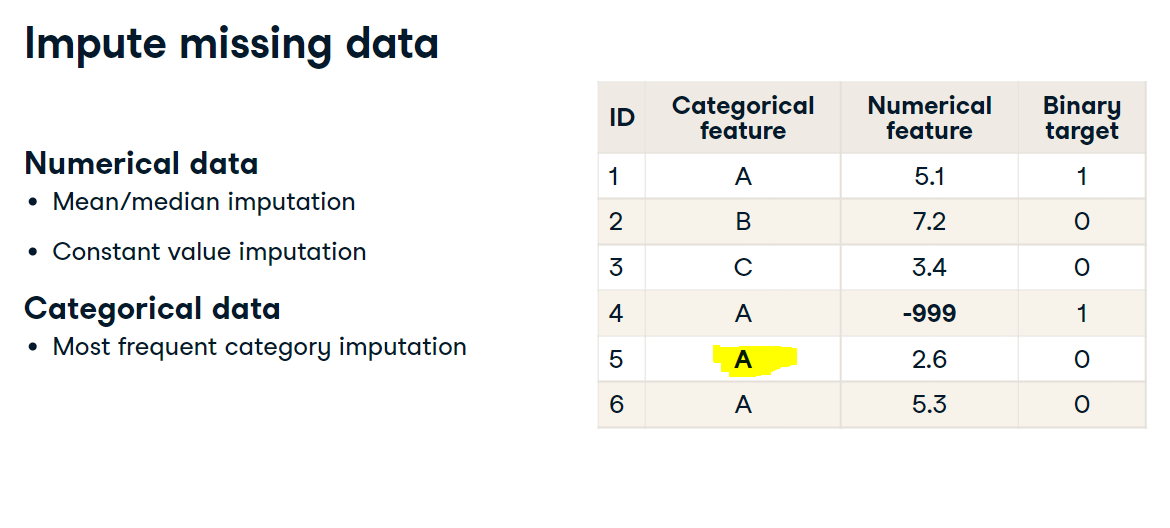

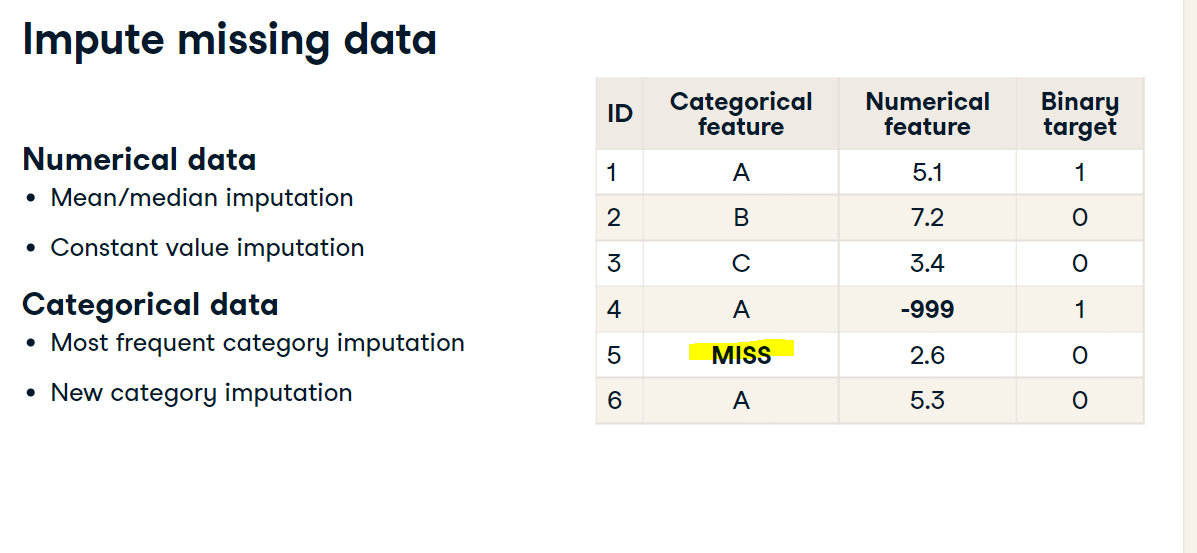

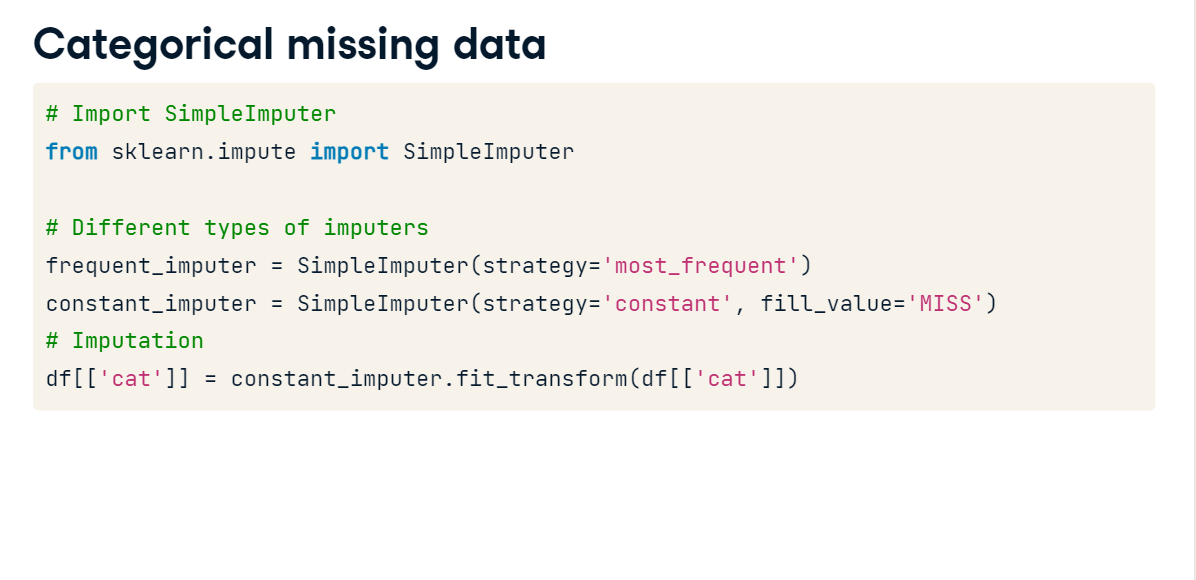

Imputing Missing Data

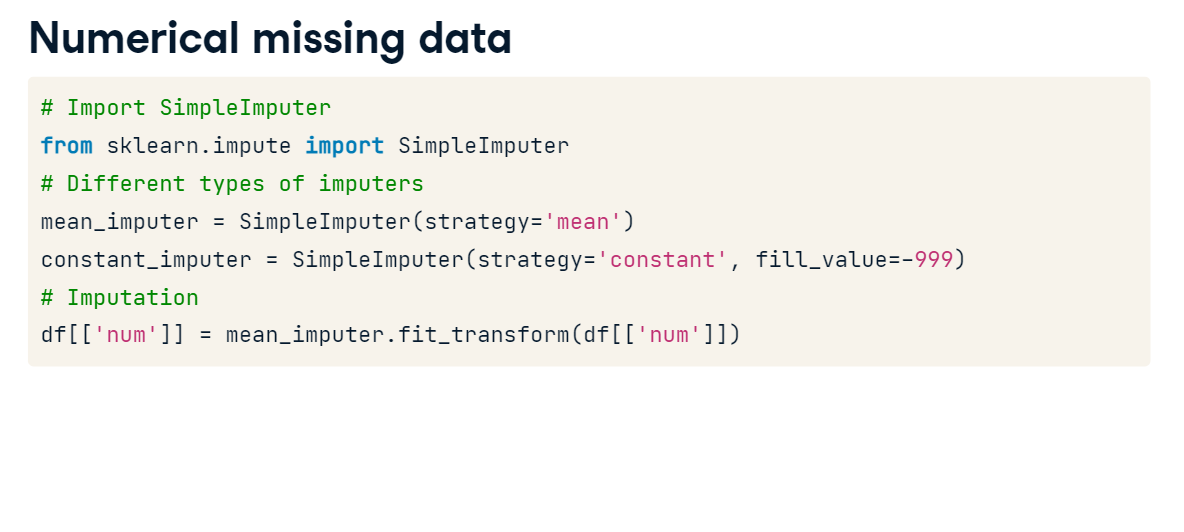

Impute missing data (Exercise)

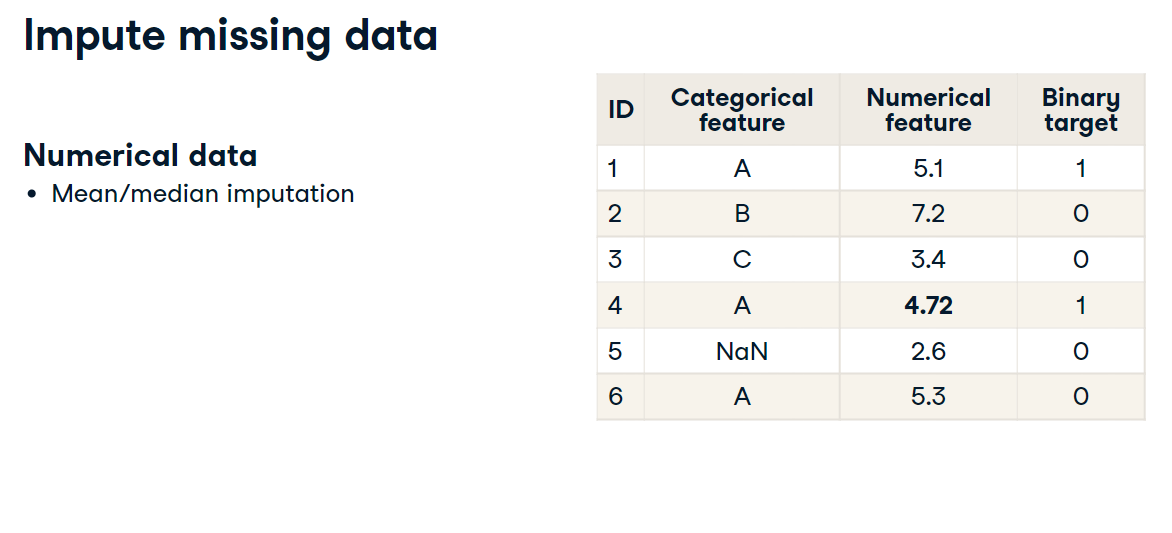

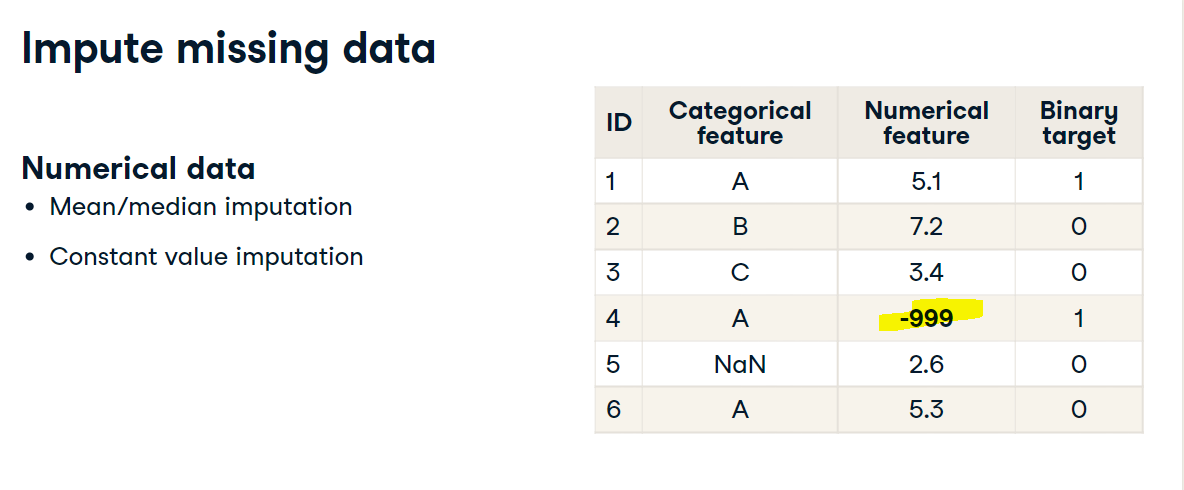

You've found that "price" and "building_id" columns have missing values in the Rental Listing Inquiries dataset. So, before passing the data to the models you need to impute these values.

Numerical feature "price" will be imputed with the mean value of the non-missing prices.

Imputing categorical feature "building_id" with the most frequent category is a bad idea, because it would mean that all the apartments with a missing "building_id" are located in the most popular building. The better idea is to impute it with a new category.

The DataFrame rental_listings with competition data is read for you.

Instructions:

- Create a SimpleImputer object with "mean" strategy.

- Impute missing prices with the mean value.

- Create an imputer with "constant" strategy. Use "MISSING" as fill_value.

- Impute missing buildings with a constant value.

from sklearn.impute import SimpleImputer

rental_listings = twosigma.copy()

# Create mean imputer

mean_imputer = SimpleImputer(strategy='mean')

# Price imputation

rental_listings[['price']] = mean_imputer.fit_transform(rental_listings[['price']])

# Create constant imputer

constant_imputer = SimpleImputer(strategy='constant', fill_value="MISSING")

# building_id imputation

rental_listings[['building_id']] = constant_imputer.fit_transform(rental_listings[['building_id']])

print(rental_listings.isnull().sum())
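To verify what the two imputers actually write into the data, here is a small sketch on a made-up three-row DataFrame where the expected values are easy to compute by hand. The toy values are assumptions for illustration.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer

# Made-up listings with one missing value in each column
toy = pd.DataFrame({
    'price': [100.0, np.nan, 300.0],
    'building_id': pd.Series(['a1b2', np.nan, 'c3d4'], dtype=object),
})

# Mean of the non-missing prices: (100 + 300) / 2 = 200
mean_imputer = SimpleImputer(strategy='mean')
toy[['price']] = mean_imputer.fit_transform(toy[['price']])

# Missing buildings become their own category
constant_imputer = SimpleImputer(strategy='constant', fill_value='MISSING')
toy[['building_id']] = constant_imputer.fit_transform(toy[['building_id']])

print(toy)
```

The double brackets matter: SimpleImputer expects a 2-D input, so a one-column DataFrame is passed rather than a Series.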

Nice! Now our data is ready to be passed to any Machine Learning model. Move on to the next chapter to build and improve your models!