Extract Stock Sentiment from News Headlines (Datacamp project)

You will apply sentiment analysis to financial news headlines to gauge how the market feels about individual stocks.

Searching for gold inside HTML files

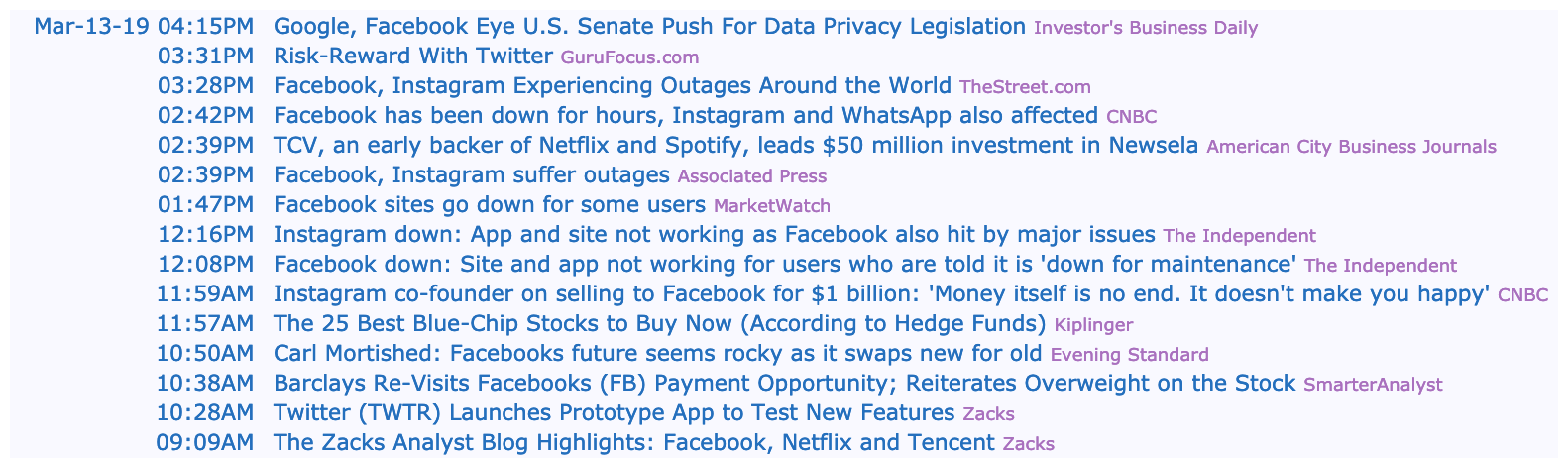

It used to take days for financial news to spread via radio, newspapers, and word of mouth. Now, in the age of the internet, it takes seconds. Did you know news articles are automatically being generated from figures and earnings call streams? Hedge funds and independent traders are using data science to process this wealth of information in the quest for profit.

In this notebook, we will generate investing insight by applying sentiment analysis on financial news headlines from FINVIZ.com. Using this natural language processing technique, we can understand the emotion behind the headlines and predict whether the market feels good or bad about a stock. It would then be possible to make educated guesses on how certain stocks will perform and trade accordingly. (And hopefully, make money!)

Why headlines? And why from FINVIZ?

- Headlines, which have similar length, are easier to parse and group than full articles, which vary in length.

- FINVIZ has a list of trusted websites, and headlines from these sites tend to be more consistent in their jargon than those from independent bloggers. Consistent textual patterns will improve the sentiment analysis.

Web scraping comes with ethical responsibilities (sending a lot of traffic to FINVIZ's servers isn't very nice), so the HTML files for Facebook and Tesla at various points in time have already been downloaded. Let's import these files into memory.

Disclaimer: Investing in the stock market involves risk and can lead to monetary loss. The content in this notebook is not to be taken as financial advice.

from bs4 import BeautifulSoup
import os

html_tables = {}

# For every saved FINVIZ page in the datasets folder...
for table_name in os.listdir('datasets'):
    # Build the path to the file
    table_path = os.path.join('datasets', table_name)
    # Open the file in read-only mode and parse it with Python's built-in HTML parser
    with open(table_path, 'r') as table_file:
        html = BeautifulSoup(table_file.read(), 'html.parser')
    # Find the element with id 'news-table' and load it into 'html_table'
    html_table = html.find(id='news-table')
    # Add the table to our dictionary, keyed by file name
    html_tables[table_name] = html_table

What is inside those files anyway?

We've grabbed the table that contains the headlines from each stock's HTML file, but before we start parsing those tables further, we need to understand how the data in that table is structured. We have a few options for this:

- Open the HTML file with a text editor (preferably one with syntax highlighting, like Sublime Text) and explore it there

- Use your browser's developer tools to explore the HTML

- Explore the headlines table here in this notebook!

Let's do the third option.

tsla = html_tables['tsla_22sep.html']

# Get all the table rows tagged in HTML with <tr> into 'tsla_tr'
tsla_tr = tsla.find_all('tr')

# For each row...
for i, table_row in enumerate(tsla_tr):
    # Read the text of the first 'a' element (the headline link) into 'link_text'
    link_text = table_row.a.get_text()
    # Read the text of the first 'td' element (the date/time cell) into 'data_text'
    data_text = table_row.td.get_text()
    # Print the row count
    print(f'Row number {i+1}:')
    # Print the contents of 'link_text' and 'data_text'
    print(link_text)
    print(data_text)
    # Exit the loop after four rows to avoid flooding the notebook output
    if i == 3:
        break
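The loop above relies on two facts about each row: the first `<td>` holds the date and/or time, and the `<a>` holds the headline. As a minimal sketch that needs only the standard library, the snippet below swaps BeautifulSoup for Python's built-in `html.parser` and walks a hypothetical, hand-written table with that shape. The markup, the `Sep-21-20 09:30AM` date/time text, and the headlines are invented for illustration, not copied from FINVIZ, and `RowCollector` is a hypothetical helper name.

```python
from html.parser import HTMLParser

# Hypothetical snippet mimicking the row structure used above:
# a date/time <td> followed by a <td> that contains the headline <a>.
SAMPLE = """
<table id="news-table">
  <tr><td>Sep-21-20 09:30AM</td><td><a href="#">Example headline one</a></td></tr>
  <tr><td>08:15AM</td><td><a href="#">Example headline two</a></td></tr>
</table>
"""


class RowCollector(HTMLParser):
    """Collect (first <td> text, <a> text) for every <tr> in the document."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._td_count = 0
        self._in_td = False
        self._in_a = False
        self._cell = ""
        self._link = ""

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            # A new row starts: reset the per-row buffers
            self._td_count = 0
            self._cell = ""
            self._link = ""
        elif tag == "td":
            self._td_count += 1
            self._in_td = True
        elif tag == "a":
            self._in_a = True

    def handle_endtag(self, tag):
        if tag == "td":
            self._in_td = False
        elif tag == "a":
            self._in_a = False
        elif tag == "tr":
            self.rows.append((self._cell.strip(), self._link.strip()))

    def handle_data(self, data):
        if self._in_a:
            self._link += data
        elif self._in_td and self._td_count == 1:
            # Only text from the first cell counts as the date/time
            self._cell += data


parser = RowCollector()
parser.feed(SAMPLE)
for cell, link in parser.rows:
    print(cell, "|", link)
```

Note that the second row carries only a time, which is exactly the case the parsing loop below has to handle.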

parsed_news = []

# Iterate through the news tables, one per downloaded file
for file_name, news_table in html_tables.items():
    # Iterate through all tr tags in 'news_table'
    for x in news_table.find_all('tr'):
        # Split the text in the first td tag (date and/or time) into a list
        date_scrape = x.td.text.split()
        # If 'date_scrape' has one element, it is the time only; the row belongs
        # to the most recent date seen, so 'date' is carried over from an earlier row.
        # Otherwise, load 'date' as the 1st element and 'time' as the 2nd.
        if len(date_scrape) == 1:
            time = date_scrape[0]
        else:
            date = date_scrape[0]
            time = date_scrape[1]
        # Extract the headline from the tr tag
        headline = x.a.get_text()
        # Extract the ticker from the file name: the string up to the first '_'
        ticker = file_name.split('_')[0]
        # Append ticker, date, time and headline as a list to 'parsed_news'
        parsed_news.append([ticker, date, time, headline])
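The date carry-over is the subtle part of the loop above. The sketch below isolates it in a pure-Python function that takes hypothetical `(date/time cell text, headline)` pairs instead of BeautifulSoup rows; `parse_rows` and the sample rows are illustrative names and data, not part of the project.

```python
def parse_rows(rows, file_name):
    """Turn (date/time text, headline) pairs into [ticker, date, time, headline] lists.

    A cell holding only a time reuses the most recent date seen, as in the loop above.
    """
    # The ticker is the part of the file name before the first '_'
    ticker = file_name.split('_')[0]
    parsed = []
    date = None  # stays None if the very first row has no date
    for cell_text, headline in rows:
        parts = cell_text.split()
        if len(parts) == 1:
            time = parts[0]
        else:
            date, time = parts[0], parts[1]
        parsed.append([ticker, date, time, headline])
    return parsed


rows = [
    ("Sep-21-20 09:30AM", "Headline A"),
    ("08:15AM", "Headline B"),
    ("Sep-20-20 07:00PM", "Headline C"),
]
for row in parse_rows(rows, "tsla_22sep.html"):
    print(row)
```

Initializing `date` to `None` is a small design choice: a table whose first row lacks a date yields an explicit `None` instead of a `NameError` or a date leaked from the previous file.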

Make NLTK think like a financial journalist

Sentiment analysis is very sensitive to context. As an example, saying "This is so addictive!" often means something positive if the context is a video game you are enjoying with your friends, but it very often means something negative when we are talking about opioids. Remember that the reason we chose headlines is so we can try to extract sentiment from financial journalists, who, like most professionals, have their own lingo. Let's now make NLTK think like a financial journalist by adding some new words and sentiment values to our lexicon.

import nltk
from nltk.sentiment.vader import SentimentIntensityAnalyzer

# Download the VADER lexicon if it is not already available locally
nltk.download('vader_lexicon')

# New words and their sentiment values
new_words = {
    'crushes': 10,
    'beats': 5,
    'misses': -5,
    'trouble': -10,
    'falls': -100,
}

# Instantiate the sentiment intensity analyzer with the existing lexicon
vader = SentimentIntensityAnalyzer()

# Update the lexicon with the finance-specific words
vader.lexicon.update(new_words)
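Why do such large values matter? In NLTK's VADER implementation, the valences of the words in a text are summed (after adjustments for negation, booster words, capitalization and punctuation) and the sum is squashed into [-1, 1] as the compound score via `normalize(score, alpha=15)`, i.e. score / sqrt(score² + 15). VADER's original lexicon rates words on roughly a -4 to +4 scale. The sketch below is a simplified re-implementation that skips all of those heuristics and uses a hypothetical toy lexicon (`toy_lexicon` and `toy_compound` are illustrative names, and the values for "good" and "bad" are only illustrative), to show how an entry like `'falls': -100` dominates a headline.

```python
import math


def normalize(score, alpha=15):
    """Map an unbounded valence sum into [-1, 1]: score / sqrt(score**2 + alpha)."""
    norm = score / math.sqrt(score * score + alpha)
    # Clamp to [-1, 1]; mathematically redundant, but guards against rounding
    return max(-1.0, min(1.0, norm))


# Hypothetical toy lexicon: two illustrative ordinary words plus the custom finance entries
toy_lexicon = {"good": 1.9, "bad": -2.5}
toy_lexicon.update({"crushes": 10, "beats": 5, "misses": -5, "trouble": -10, "falls": -100})


def toy_compound(headline):
    """Simplified compound score: sum known word valences, then normalize (no VADER heuristics)."""
    total = sum(toy_lexicon.get(word, 0.0) for word in headline.lower().split())
    return normalize(total)


for h in ["Tesla beats estimates", "Tesla falls despite good news", "Facebook misses targets"]:
    print(f"{h!r}: {toy_compound(h):.3f}")
```

With a valence of -100, a single "falls" pushes the compound score close to -1 even when a positive word appears in the same headline, which is worth keeping in mind when reading the averages later on.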

BREAKING NEWS: NLTK Crushes Sentiment Estimates

Now that we have the data and the algorithm loaded, we will get to the core of the matter: programmatically predicting sentiment out of news headlines! Luckily for us, VADER is very high-level, so in this case we will not adjust the model further* beyond the lexicon additions from before.

*Using VADER "out of the box" with a few extra lexicon entries would likely translate into heavy losses with real money. A sentiment analysis tool with a real chance of being profitable would require a very extensive lexicon dedicated to financial news, and even then a pre-packaged model like VADER might not be enough.

import pandas as pd

# Use these column names
columns = ['ticker', 'date', 'time', 'headline']

# Convert the list of lists into a DataFrame
scored_news = pd.DataFrame(parsed_news, columns=columns)
print(scored_news.head())

# Iterate through the headlines and get the polarity scores
scores = scored_news['headline'].apply(vader.polarity_scores)

# Convert the Series of dicts into a DataFrame with one column per score (neg, neu, pos, compound)
scores_df = pd.DataFrame.from_records(scores.tolist())

# Join the DataFrames on their shared row index
scored_news = scored_news.join(scores_df)

# Convert the date column from string to datetime.date
scored_news['date'] = pd.to_datetime(scored_news.date).dt.date
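`pd.to_datetime` is left to infer the format of the date strings here. If the scraped dates follow a `Jan-03-19` pattern (month abbreviation, day, two-digit year; an assumption about the data, not something this notebook shows), the format can be spelled out instead. The standard-library sketch below uses the same strftime directives that `pd.to_datetime` accepts through its `format` argument; `parse_finviz_date` is a hypothetical helper name.

```python
from datetime import date, datetime


def parse_finviz_date(text):
    """Parse an assumed 'Mon-DD-YY' string such as 'Jan-03-19' into a datetime.date."""
    return datetime.strptime(text, "%b-%d-%y").date()


for raw in ["Jan-03-19", "Sep-21-20"]:
    print(raw, "->", parse_finviz_date(raw).isoformat())
```

Passing `format="%b-%d-%y"` to `pd.to_datetime` would apply the same rule to the whole column and raise on rows that do not match, rather than relying on format inference.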

import matplotlib.pyplot as plt

plt.style.use("fivethirtyeight")
%matplotlib inline

# Group by date and ticker and average the numeric score columns
# (numeric_only=True skips the text columns 'time' and 'headline')
mean_c = scored_news.groupby(["date", "ticker"]).mean(numeric_only=True)

# Unstack the ticker level into the columns
mean_c = mean_c.unstack('ticker')

# Get the cross-section of 'compound' in the columns axis
mean_c = mean_c.xs('compound', axis='columns')

# Plot a bar chart with pandas
mean_c.plot.bar(figsize=(10, 6))
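What `groupby(...).mean()`, `unstack('ticker')` and `xs('compound', ...)` do together can be sketched in plain Python: average the compound scores of each (date, ticker) pair, then lay them out as one row per date with one entry per ticker. The records below are made up and stand in for `scored_news`.

```python
from collections import defaultdict
from statistics import mean

# Made-up (date, ticker, compound) records standing in for 'scored_news'
records = [
    ("2019-01-03", "fb", 0.5),
    ("2019-01-03", "fb", -0.25),
    ("2019-01-03", "tsla", 0.25),
    ("2019-01-04", "tsla", -0.5),
]

# groupby(["date", "ticker"]): collect the compound scores of each pair
groups = defaultdict(list)
for day, ticker, compound in records:
    groups[(day, ticker)].append(compound)

# .mean() then unstack('ticker'): one dict per date, keyed by ticker
table = defaultdict(dict)
for (day, ticker), values in groups.items():
    table[day][ticker] = mean(values)

for day in sorted(table):
    print(day, table[day])
```

One difference from pandas: a missing (date, ticker) pair here is simply an absent key, whereas `unstack` fills it with `NaN`, which is why some dates show bars for only one stock.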

Weekends and duplicates

What happened to Tesla on November 22nd? Since we happen to have the headlines inside our DataFrame, a quick peek reveals that there are a few problems with that particular day:

- There are only 5 headlines for that day.

- Two of the headlines are verbatim copies of another headline, but from a different news outlet.

Let's clean up the dataset a bit, but not too much! While some headlines are the same news piece from different sources, the fact that they are written differently could provide different perspectives on the same story. Plus, when one piece of news is more important, it tends to get more headlines from multiple sources. What we want to get rid of is verbatim copied headlines, as these are very likely coming from the same journalist and are just being "forwarded" around, so to speak.
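The rule described above (drop a headline only when the same ticker already has the exact same text, and keep reworded versions) can be sketched in plain Python. `drop_verbatim_duplicates` and the sample rows are hypothetical; the function mirrors the behavior of pandas' `drop_duplicates(subset=['ticker', 'headline'])`, which keeps the first occurrence by default.

```python
def drop_verbatim_duplicates(rows):
    """Keep the first occurrence of each (ticker, headline) pair, in original order."""
    seen = set()
    kept = []
    for row in rows:
        key = (row["ticker"], row["headline"])
        if key not in seen:
            seen.add(key)
            kept.append(row)
    return kept


news = [
    {"ticker": "tsla", "headline": "Tesla stock rises"},
    {"ticker": "tsla", "headline": "Tesla stock rises"},           # verbatim copy: dropped
    {"ticker": "tsla", "headline": "Tesla shares climb"},          # reworded: kept
    {"ticker": "fb", "headline": "Tesla stock rises"},             # other ticker: kept
]
clean = drop_verbatim_duplicates(news)
print(f"Before we had {len(news)} headlines, now we have {len(clean)}")
```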

# Count the number of headlines before dropping duplicates
num_news_before = scored_news['headline'].count()

# Drop duplicates based on ticker and headline, keeping the first occurrence
scored_news_clean = scored_news.drop_duplicates(subset=['ticker', 'headline'])

# Count the number of headlines after dropping duplicates
num_news_after = scored_news_clean['headline'].count()

# Print the before and after numbers to get an idea of how we did
print(f"Before we had {num_news_before} headlines, now we have {num_news_after}")

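import datetime

# Index by ticker and date
single_day = scored_news_clean.set_index(['ticker', 'date'])

# Cross-section the fb rows
single_day = single_day.xs('fb')

# info() prints its summary directly (and returns None), so no print() is needed
single_day.info()

# Select the 3rd of January 2019 (the 'date' index holds datetime.date objects);
# copy() makes the selection an independent DataFrame before modifying it
single_day = single_day.loc[datetime.date(2019, 1, 3)].copy()

# Convert the time strings to datetime.time objects
single_day['time'] = pd.to_datetime(single_day['time']).dt.time

# Set the index to time
single_day = single_day.set_index('time')

# Sort it chronologically
single_day = single_day.sort_index()
print(single_day.head())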
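Converting the time column before sorting matters because 12-hour clock strings do not sort chronologically as text. The standard-library sketch below assumes times formatted like `06:15PM` (an assumption about the scraped data) and uses a hypothetical helper, `to_time`, to compare plain string sorting with sorting on parsed `datetime.time` values.

```python
from datetime import datetime


def to_time(text):
    """Convert an assumed 12-hour clock string such as '06:15PM' into a datetime.time."""
    return datetime.strptime(text, "%I:%M%p").time()


times = ["06:15PM", "09:30AM", "12:05PM", "12:10AM"]
print("as text:      ", sorted(times))
print("chronological:", sorted(times, key=to_time))
```

Sorted as text, the evening headline comes first and "12:10AM" (ten past midnight) comes last; sorted on parsed times, the order follows the trading day.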

TITLE = "Negative, neutral, and positive sentiment for FB on 2019-01-03"
COLORS = ["red", "orange", "green"]

# Drop the columns that aren't useful for the plot, leaving neg, neu and pos
plot_day = single_day.drop(['compound', 'headline'], axis=1)

# Rename the columns to 'negative', 'neutral', and 'positive'
plot_day.columns = ['negative', 'neutral', 'positive']

# Plot a stacked bar chart ('color' is forwarded to matplotlib)
plot_day.plot.bar(stacked=True,
                  figsize=(10, 6),
                  title=TITLE,
                  color=COLORS).legend(bbox_to_anchor=(1.2, 0.5))
plt.ylabel("scores")